Adam, 44, had bought shares in only one other company when he decided to invest in a stock called Nvidia last month after a “hot tip” from a friend.

“It’s artificial intelligence and clearly there’s money in it,” says Adam, who works in hospitality in London and asked that his surname be withheld because his family is unaware of his stock trading.

Although he struggles to remember the company’s name, how to pronounce it (it’s en-vid-iya) or even exactly what it does in artificial intelligence, Adam says: “This is the future, it’s a Cyberdyne Systems thing,” referring to the fictional company behind the artificial intelligence in the Terminator movies. “People are a little dazzled.”

If Nvidia is only now capturing the popular imagination, it has long held Wall Street’s attention. The 31-year-old chipmaker surpassed Apple and Microsoft this week to briefly become the world’s most valuable company, with a market value of up to $3.3 trillion.

Explosive demand for its graphics processing units (GPUs), widely seen as the best chips for building large AI systems such as those developed by Meta and Microsoft, has boosted the stock price by around 700 percent since the launch of OpenAI’s ChatGPT chatbot in November 2022.

The unprecedented rise of a company that until recently was little known outside the tech industry reflects the artificial intelligence fervor that has gripped Silicon Valley and Wall Street alike. But its slide back to third place after just a few days underlines the stiff competition in this new technological arena.

Nvidia’s rise is the story of the AI economy: its explosive growth, its appeal to investors, and its unpredictable future. Where it goes next is set to reflect—and perhaps dictate—the path of this economy.

The last time a company with a brand as relatively obscure as Nvidia’s held that position was in March 2000, when Cisco, which makes networking equipment, overtook Microsoft at the height of the dotcom bubble.

Now, as then, companies are investing billions of dollars in building the infrastructure for a promised revolution not only in computing but also in the global economy. Like Nvidia, Cisco struck gold by selling digital picks and shovels to Internet prospectors. But its share price never returned to its 2000 peak after the bubble burst later that year.

The fact that Big Tech’s surge in AI capital spending is based on revenue projections rather than actual returns has raised fears of history repeating itself.

262%: Nvidia’s year-over-year revenue growth in the last quarter

“I understand the concerns,” says Bernstein analyst Stacy Rasgon, but there are important differences. “With Cisco, they were building a lot of capacity for demand they hoped would come, and even today there’s fiber buried in the ground that was never used.”

Rasgon adds that compared to Cisco’s price at the height of the dotcom bubble, Nvidia’s stock trades at a much lower multiple of projected earnings.

Companies like Microsoft are already seeing some return on their investment in AI chips, though others like Meta have warned it will take longer. If an AI bubble is forming, Rasgon adds, it doesn’t appear to be in imminent danger of bursting.

Cisco’s rise and fall in the dotcom era contrasts with the fortunes of Apple and Microsoft. The two tech companies have been competing for the top spot on Wall Street for years, not only by making highly successful products but also by building platforms that support massive commercial ecosystems. Apple has said there are about 2 million apps in its App Store, generating hundreds of billions of dollars for developers each year.

Nvidia’s economics look very different from Apple’s. In many ways, the popularity of a single app, ChatGPT, is responsible for much of the investment that has driven Nvidia’s share price higher over the past few months. The chipmaker says 40,000 companies and 3,700 “GPU-accelerated applications” are part of its software ecosystem.

Instead of selling hundreds of millions of affordable electronic devices to the masses every year, Nvidia has become the world’s most valuable company by selling a relatively small number of expensive data center AI chips to a handful of companies.

Major cloud computing providers such as Microsoft, Amazon and Google accounted for nearly half of Nvidia’s data center revenue, the company said last month. Nvidia sold 3.76 million data center GPUs last year, according to chip analyst group TechInsights. That was still enough to give it a 72% share of this niche market, leaving competitors like Intel and AMD far behind.

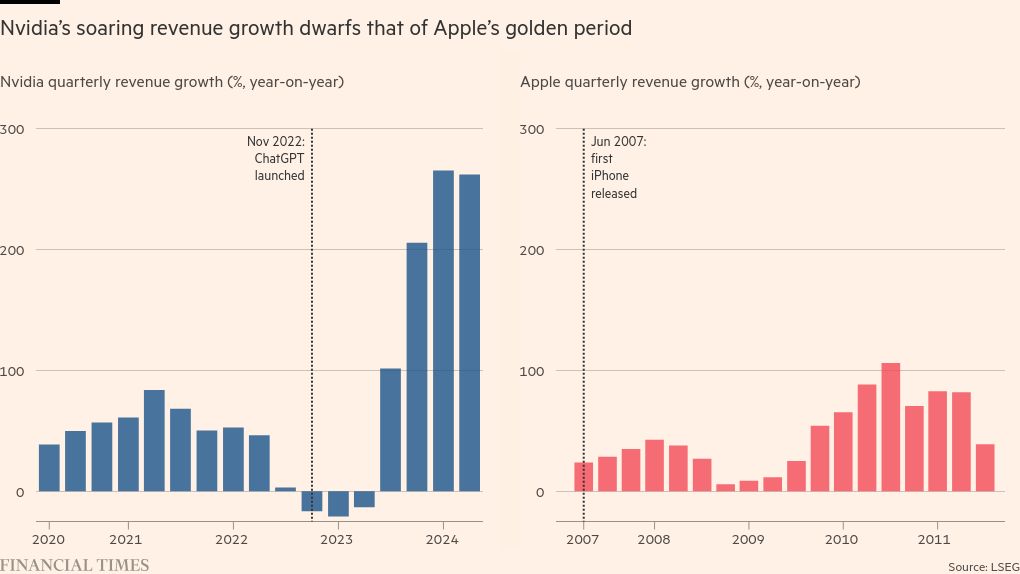

Yet these sales are growing rapidly. In its most recent quarter, which ended in April, Nvidia’s revenue grew 262 percent year-over-year to $26 billion, an even faster pace than Apple achieved in the early years of the iPhone.

Demand for Nvidia’s products has been fueled by tech companies trying to overcome questions about AI capabilities by throwing chips at the problem.

In pursuit of the next leap forward in machine intelligence, companies like OpenAI, Microsoft, Meta and Elon Musk’s new xAI startup are racing to build data centers that link up to 100,000 AI chips together into supercomputers, three times the size of today’s largest clusters. Each of these server farms costs $4 billion in hardware alone, according to chip consultancy SemiAnalysis.

The hunger for more AI computing capacity shows no sign of abating. Nvidia CEO Jensen Huang predicts that more than $1 trillion will be spent in the coming years to rebuild existing data centers and build what he calls “AI factories,” as everyone from major tech companies to nation states builds their own artificial intelligence models.

That scale of investment will only continue if Nvidia’s customers figure out how to make money from AI themselves. And just as the company has soared to the top of the stock market, more people in Silicon Valley are beginning to question whether AI can live up to the hype.

David Cahn, a partner at Sequoia, one of Silicon Valley’s biggest startup investors, warned in a blog post this week about the “speculative frenzy” surrounding AI and the “delusion” that “we’re all going to get rich quick” from advanced AI and Nvidia’s chip stocks.

While predicting huge economic value from AI, Cahn estimates that big tech companies will collectively need to generate hundreds of billions of dollars more in new revenue each year to recoup their accelerating investment in AI infrastructure. By contrast, incremental sales from generative AI at companies such as Microsoft, Amazon Web Services and OpenAI are expected to run into the single-digit billions of dollars this year.

The era when tech executives could make grandiose promises about AI capabilities is “coming to an end,” says Euro Beinat, global head of AI and data science at Prosus Group, one of the world’s largest technology investors. “Over the next 16 to 18 months there will be a lot more realism about what we can and can’t do.”

Nvidia will probably never be a mass consumer company like Apple. But analysts say if it is to continue to thrive, it needs to emulate the iPhone maker and build a software platform that connects business customers to the hardware.

“The argument that Nvidia won’t just drop out and become Cisco — once the hardware hype cycle wears off — has to be tied to the software platform,” says Ben Bajarin of Silicon Valley-based consultancy Creative Strategies.

Huang has long argued that Nvidia is more than just a chip company. Rather, he has said, it provides all the ingredients to build “a whole supercomputer.” This includes chips, networking equipment and its Cuda software, which allows AI applications to “talk” to its chips and is considered by many to be Nvidia’s secret weapon.

In March, Huang introduced Nvidia Inference Microservices, or NIM: a set of off-the-shelf software tools that make it easier for businesses to apply AI to specific industries or domains.

Huang said these tools can be thought of as an “operating system” for running large language models like those that support ChatGPT. “I think we’re going to be manufacturing NIM at a very large scale,” he said, predicting that its software platform — dubbed Nvidia AI Enterprise — “will be a very big business.”

Nvidia previously gave its software away for free but now plans to charge companies $4,500 per GPU per year to deploy Nvidia AI Enterprise. The effort is critical to winning more corporate and government customers that lack Big Tech’s in-house AI expertise.

The problem for Nvidia is that many of its biggest customers also want to “own” this relationship with developers and build their own AI platform. Microsoft wants developers to build on its Azure cloud platform. OpenAI has launched a GPT Store modeled after the App Store, which offers customized versions of ChatGPT. Amazon and Google have their own developer tools, as do AI startups Anthropic, Mistral, and many others.

That’s not the only way Nvidia is competing with its biggest customers. Google developed its own AI accelerator chip, the Tensor Processing Unit, and Amazon and Microsoft have followed with their own. Although these efforts remain small in scale, the TPU in particular shows that customers can reduce their dependence on Nvidia.

In turn, Nvidia is cultivating potential future rivals to its Big Tech customers in an effort to diversify its ecosystem. It has pitched its chips to Lambda Labs and CoreWeave, cloud computing startups that focus on AI services and lease access to Nvidia GPUs, and is also courting local players such as France’s Scaleway as alternatives to the multinational giants.

The moves are part of a broader acceleration of Nvidia’s investment activities in the emerging AI technology ecosystem. In the past two months alone, it has participated in funding rounds for Scale AI, a data labeling company that raised $1 billion, and Mistral, a Paris-based OpenAI rival that raised €600 million.

PitchBook data shows that Nvidia has closed 116 such deals over the past five years. In addition to potential financial returns, its stakes in startups give Nvidia early insight into what the next generation of artificial intelligence might look like, helping to shape its own product roadmap.

“[Huang] is up to his neck in the details of AI trends and what they might mean,” says Kanjun Qiu, chief executive of AI research lab Imbue, which Nvidia backed last year. “He’s built a huge team to work directly with AI labs to understand what they’re trying to build, even if they’re not his customers.”

It’s this kind of long-term thinking that has put Nvidia at the center of the current AI boom. But Nvidia’s journey to becoming the world’s most valuable company has included several near-death experiences, Huang has said, and in Silicon Valley no company’s survival is guaranteed.