Cybersecurity researchers have discovered a very serious security flaw in the Vanna.AI library that can be exploited to achieve a remote code execution vulnerability using rapid injection techniques.

The vulnerability, tracked as CVE-2024-5565 (CVSS score: 8.1), is related to a fast injection case in the “ask” function that could be exploited to trick the library into executing arbitrary commands, supply chain security firm JFrog said.

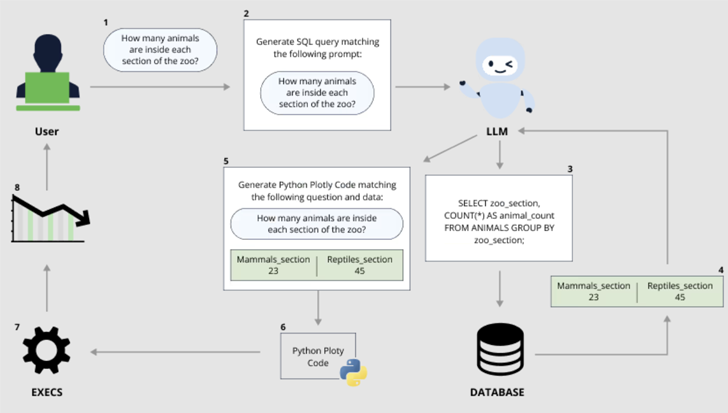

Vanna is a Python-based machine learning library that allows users to chat with their SQL database and gain insights by “just asking questions” (aka prompts) that are translated into an equivalent SQL query using a large language model (LLM).

The rapid adoption of generative artificial intelligence (AI) models in recent years has brought to the fore the risks of abuse by malicious actors who can weaponize tools by providing adversarial inputs that bypass the security mechanisms built into them.

One such prominent class of attack is fast injection, which refers to a type of AI jailbreak that can be used to ignore guardrails erected by LLM providers to prevent the production of offensive, harmful, or illegal content or the execution of instructions that violate the intended purpose of the application.

Such attacks can be indirect, where the system processes data controlled by a third party (eg incoming emails or editable documents) to launch a malicious payload that leads to an AI jailbreak.

They can also take the form of what’s called a multi-shot jailbreak or multi-turn jailbreak (aka Crescendo), in which the operator “starts with innocuous dialogue and gradually leads the conversation to an intended, forbidden target.”

This approach can be further extended to accomplish another new jailbreak attack known as Skeleton Key.

“This AI jailbreak technique works by using a multi-turn (or multi-stage) strategy that causes the model to ignore its railing,” said Mark Russinovich, CTO of Microsoft Azure. “Once the guardrails are ignored, the model will not be able to detect malicious or disapproved requests from others.”

Skeleton Key also differs from Crescendo in that once a jailbreak is successful and the rules of the system are changed, the model can generate answers to questions that would otherwise be forbidden regardless of the ethical and security risks involved.

“When a Skeleton Key jailbreak is successful, the model acknowledges that it has updated its guidelines and will subsequently follow the guidelines to create any content, no matter how much it violates its original responsible AI guidelines,” Russinovich said.

“Unlike other jailbreaks such as Crescendo, where models have to be asked for tasks indirectly or through coding, Skeleton Key puts models into a mode where the user can directly request tasks. Additionally, the model output appears to be completely unfiltered, revealing a range of the model’s knowledge or ability to produce the desired content.”

JFrog’s latest findings – also independently published by Tong Liu – show how rapid injections can have serious consequences, especially when coupled with command execution.

CVE-2024-5565 exploits the fact that Vanna facilitates text-to-SQL generation to create SQL queries that are then executed and graphically presented to users using the Plotly graphics library.

This is achieved using the “ask” function – e.g. vn.ask(“What are the top 10 customers by sales?”) – which is one of the main API endpoints to trigger SQL query generation. database.

The above behavior, coupled with Plotly’s dynamic code generation, creates a security hole that allows a threat actor to send a specially crafted challenge containing a command to be executed on the underlying system.

“The Vanna library uses a prompt function to present visualized results to the user, it is possible to modify the prompt using prompt injection to run arbitrary Python code instead of the intended visualization code,” said JFrog.

“Specifically, enabling external input to the library’s ‘ask’ method with ‘visualize’ set to True (the default behavior) results in remote code execution.”

After responsible disclosure, Vanna released a hardening guide warning users that the Plotly integration can be used to generate arbitrary Python code and that users exposing the feature should do so in a sandbox environment.

“This discovery demonstrates that the risks of widespread use of GenAI/LLM without proper governance and security can have drastic consequences for organizations,” Shachar Menashe, senior director of security research at JFrog, said in a statement.

“The dangers of rapid injection are still not well known, but they can be done easily. Companies should not rely on pre-challenges as a foolproof defense mechanism and should use more robust mechanisms when connecting LLM to critical resources such as databases or dynamic code generation.”